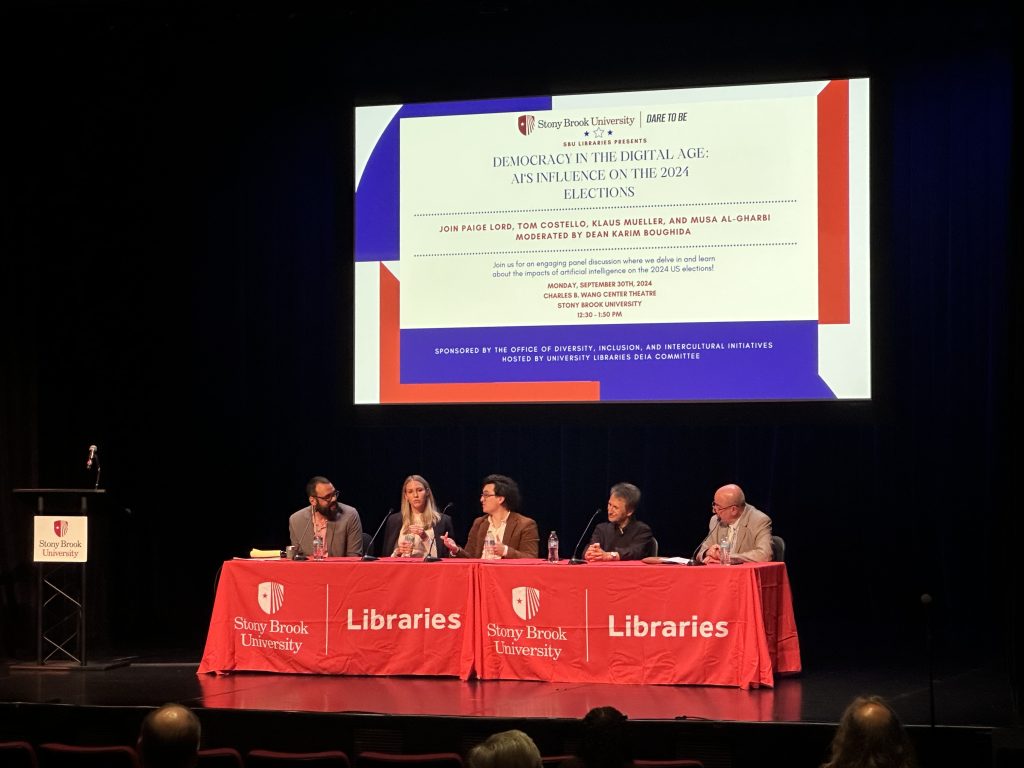

On September 30th, 2024, Paige Lord, Tom Costello, Musa al-Gharbi, Klaus Mueller, and Dean Karim Boughida discussed Democracy in the Digital Age at the Wang Center Theatre.

Paige Lord is an expert in AI ethics with a focus on its impact on policy and society. She is the creator of the AI Ethics & Policy Insights newsletter, has contributed to works like the Top 20 Controls for Responsible AI, and regularly shares her insights on TikTok (@aiethicswithpaige). Paige works at GitHub, where she leads the go-to-market strategy for AI and responsible AI products and features, including the recent launch of GitHub Models. Her thought leadership has been featured in Wired Magazine. She holds a master’s degree from Harvard University with a focus on AI and privacy law and is committed to shaping the next wave of technology policy in a way that prioritizes human flourishing. During the panel, Paige questioned the use of facial recognition at TSA checkpoints because there is no transparency about how travelers’ data is being used. She believes that a lot of misinformation has spread on social media during this election, and she actively debunks it on her TikTok platform. Paige also spoke about watermarking and audio fabrication, noting that the regulations covering them are very broad and that fabricated content is difficult to distinguish from the real thing.

Tom Costello, Ph.D. is an Assistant Professor of Psychology at American University. Tom studies where political and social beliefs come from and also uses AI to put together persuasive papers. He said that, according to AI, this election leans about two percent toward Trump over Harris. He mentioned that spear-phishing attacks will affect voting outcomes and that elections will see synthetic misinformation spread through bots. Another downside of AI is that a candidate who loses can blame the loss on AI and question the validity of the election.

Klaus Mueller, Ph.D. is an Assistant Professor in the Computer Science Department at Stony Brook University. He is also a senior scientist at the Computational Science Initiative at Brookhaven National Lab and presently serves as the Interim Chair of the Stony Brook Department of Technology and Society. His current research interests are visual analytics, explainable machine learning and AI, algorithmic fairness and transparency, data science, and computational imaging. He won the US National Science Foundation Early Career Award and the SUNY Chancellor Award for Excellence in Scholarship and Creative Activity. In 2018 Klaus was inducted into the National Academy of Inventors. To date, he has authored more than 300 peer-reviewed journal and conference papers, which have been cited more than 14,000 times. He was the Editor-in-Chief of IEEE Transactions on Visualization and Computer Graphics from 2019 to 2022. Klaus is a Fellow of the IEEE. During the panel, Klaus described how he sees the crisis of misinformation and belief in conspiracy theories: people often overlook the intermediate steps between a cause and its effect. For example, the sun doesn’t directly cause wildfires; many factors in between need to happen for it to cause a fire. People may have different personalities, but they might also have similar responses.

Musa al-Gharbi, Ph.D. is an Assistant Professor of Communication and Journalism at Stony Brook University. He is also a writer who explores the sociology of knowledge. His view on this topic is that people are tempted to believe misinformation spread online when they disagree with something. Although Hugo Mercier’s book Not Born Yesterday argues that people are not prone to being manipulated by others, many factors still lead them to believe fake news. One is the temptation to believe and create fake news when their side is losing or when they disagree with the results. In this digital age, people often use social media to exaggerate information that may not be true.

AI is a powerful tool affecting modern-day elections. People tend to polarize, and AI is always readily available to us. This means people can take their thoughts and broadcast them on the media with minimal skills. Such content can move people whose positions are in the middle and gain supporters for each side. In addition, celebrity endorsements are common today and can also influence voters, giving them the sense that “my favorite celebrity is voting for this side, so I will do so too”. Misinformation spreads easily because the people sharing it are partisan: accuracy does not matter to them as long as they can make the opposing side look bad.

It is undeniable that people are polarized. One strategy to gain views on videos is to spread fake information. An example would be a video of Kamala Harris edited to make it seem as if she is saying something, when the individual words were actually cut from various speeches. Even when viewers know that the video is fake, that does not prevent it from being shared widely and gaining more views.

This panel event was hosted by University Libraries and sponsored by the Office of Diversity, Inclusion, and Intercultural Initiatives (DI3).

This post was written by Vicky Huang, Health Sciences Library Student Assistant.

Sunny Chung
