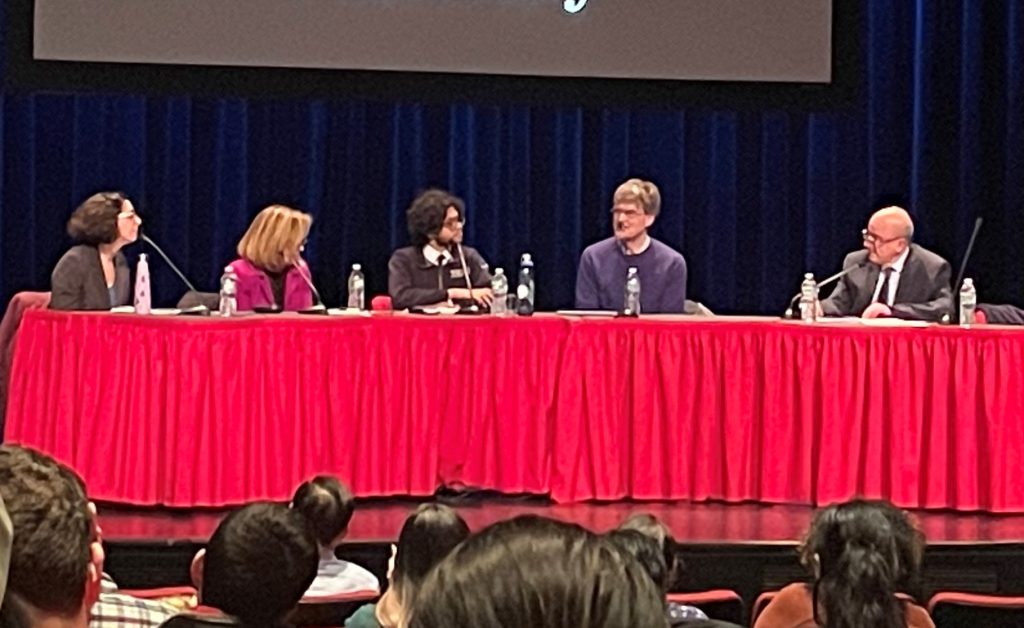

On March 8, 2023, University Libraries hosted ChatGPT & Generative AI: An SBU Forum. Dean Karim Boughida moderated a panel of faculty, staff, and students with interest and expertise in large language models (LLMs) and how they might intersect with higher education:

- Susan Brennan from Psychology, Linguistics, and Computer Science

- Sowad Ocean Karim, President of Undergraduate Student Government

- Owen Rambow from Linguistics and the Institute for Advanced Computational Science (IACS)

- Rose Tirotta-Esposito, Director of the Center for Excellence in Learning and Teaching (CELT)

Rambow provided a brief introduction to the concept of LLMs, explaining such terms and concepts as perceptrons, neural networks, transformers, and the differences between word-level predictions and sentence-level predictions in different language models. He explained that ChatGPT is an example of a “fine-tuned LLM,” whose tasks have been annotated, but there are many different examples of LLMs that have been in use by different communities for the past several years, and many more will emerge in the future.

Brennan then shared some examples of AI writing, human writing, how AI detectors might work (or not work), some studies of how AI writing compares to human writing within specific tasks, and how certain publications like Science and Nature are already addressing the use of AI within scholarly publishing. Brennan suggested that sensible policies need to focus on accountability: LLMs cannot think and they cannot be held accountable, but humans can. Because of the quickly changing nature of the technology, all policies should be re-evaluated frequently, perhaps every 6 months.

Tirotta-Esposito emphasized the importance of being open to different ways of integrating ChatGPT into the classroom and the value of transparency in faculty and student use. Integrating conversations about ChatGPT in class will show students that faculty know about the technology and will also help students develop an understanding of when and how to use, or not use, ChatGPT. Rethinking assignments and using authentic assessments are examples of useful strategies moving forward.

USG President Karim highlighted how ChatGPT can be helpful for students. One example is how students with stigmatized language dialects or language barriers might be able to use the technology to help with drafting throughout the writing process. He also suggested that ChatGPT might actually increase the potential for critical thinking because it makes it easier for a “further going out from what ChatGPT generates” – it gives students a starting point from which they can take ideas further and deeper.

The extensive Q & A brought forth voices from across campus, and a particular highlight of the event was the many different students who chimed in with thoughts and concerns. Ideas that emerged from the Q & A session included:

- Bias is a serious issue, as biases are perpetuated by AI

- LLMs are good at making beautiful sentences, but not reliable for content

- Source evaluation remains as critical as ever – if you don’t know or understand the source of information, then you should not rely on that information

- Accountability – evaluate multiple sources; libraries have taught information literacy through many technological changes, and AI won’t be different

- In assignments, ask students to make choices and justify their choices; perhaps insist they include hyperlinks to all sources

- AI might be good at formulaic writing, but great works of art are often powerful because they break the formulas

- Deep fakes are a big problem; we will have to change how we weigh evidence and trust our senses

- Authenticity and technology enhancements; is authenticity lost when using ChatGPT?

- Stealing / intellectual property / copyright violation vs. cheating / being misleading; non-transparent use of AI might not be the former, but it could be the latter

- Hidden costs of technology, e.g., the hidden human labor of data labelers in the Global South

- A tool must represent itself properly as a tool; it is not capable of moral standards

- Brennan: intelligence requires “sensors, effectors, and biological constraints,” which AI does not have

Photo credit: Victoria Pilato

Christine Fena
